TCP vs UDP – Which Is Better for Streaming?

Streaming is an innovative way to connect with your audience, especially as video is one of the most effective, preferred mediums for online consumers. It is an essential part of digital communication, whether for entertainment, education, or business. With video content dominating online platforms, choosing the right streaming protocol is crucial for delivering high-quality, seamless playback.

While setting up a stream might seem simple, the underlying technology plays a significant role in performance. A key decision is whether to use TCP or UDP for streaming, as each protocol affects factors like speed, reliability, and latency. Understanding the difference between TCP and UDP will help you optimise your stream for the best viewing experience.

So, is video streaming TCP or UDP, and which protocol is better for your needs? Let’s break down the key differences and find out which one works best for real-time streaming, live broadcasts, and on-demand video services.

Table of Contents:

- What is a Protocol?

- What is TCP?

- What is UDP?

- What is QUIC?

- What is RIST?

- What is SRT?

- What is WebRTC?

- What is LL-HLS?

- What is E-RTMP?

- What is HESP?

- TCP vs UDP: Which Is Better for Streaming?

- Future Trends and Considerations in Streaming Protocols

- FAQs

- Conclusion

What is a Protocol?

Streaming protocols are considered one of the fundamental building blocks of professional broadcasting. They define how data is transmitted over the internet, ensuring seamless content delivery to users.

A protocol is a set of established rules and standards that govern data transmission. These protocols break files into smaller components for efficient transport and then reassemble them at the user’s end for smooth playback. For example, you’re able to read this article right now thanks to the HTTPS protocol carrying data between your device and the website’s server.

However, when it comes to video streaming, two key protocols dominate: TCP and UDP. They are often weighed against each other, but each serves a distinct purpose. Before comparing TCP vs UDP for streaming, let’s first take a closer look at some of the emerging protocols.

Emergence of Modern Protocols

While TCP and UDP remain the foundation of internet communication, newer streaming and transport protocols have emerged to address their limitations, particularly in video streaming. These modern protocols optimise reliability, latency, and efficiency, making them well suited to a range of business applications.

The key emerging protocols include the following:

- QUIC (Quick UDP Internet Connections)

- RIST (Reliable Internet Stream Transport)

- SRT (Secure Reliable Transport)

- WebRTC (Web Real-Time Communication)

- LL-HLS (Low Latency HLS)

- Enhanced RTMP (E-RTMP)

- High Efficiency Streaming Protocol (HESP)

Hybrid Streaming Approaches

Many modern businesses are leveraging hybrid streaming strategies, combining UDP’s speed with TCP’s reliability to optimise performance. This allows services to dynamically switch between protocols based on network conditions, ensuring a smooth viewer experience. For example:

- Live sports streaming platforms use SRT or RIST to achieve real-time delivery while ensuring minimal packet loss.

- Corporate webinars and video conferencing rely on WebRTC for low-latency interaction, often using TCP fallback for stability.

- OTT platforms (like Netflix and Disney+) primarily use TCP for video streaming but are experimenting with QUIC for faster connections.

What is TCP?

Transmission Control Protocol (TCP) is a fundamental protocol for exchanging data across computer networks. It establishes reliable, two-way communication between devices, ensuring that data is transmitted accurately and in order. Unlike UDP streaming, which prioritises speed over reliability, TCP streaming incorporates mechanisms to detect and recover from packet loss, duplication, and corruption, making it the preferred choice for applications where data integrity is critical.

Alongside UDP and SCTP, TCP is a core transport protocol of the Internet protocol suite. It plays a vital role in applications where reliability is more important than speed, such as file transfers, email communication, and web browsing. TCP’s structured approach ensures that data is received as intended, making it the backbone of many internet-based services.

If you’re wondering what the difference between TCP and UDP is, the key distinction lies in reliability versus speed. TCP ensures that all data packets arrive correctly and in sequence, while UDP sends packets without retransmission or ordering guarantees, which makes it faster and well suited to real-time applications like UDP video streaming and video conferencing.

How Does TCP Work?

TCP enables bidirectional communication, meaning both systems involved in the connection can send and receive data simultaneously. This process is similar to a telephone conversation, where both parties actively exchange information.

TCP sends data in packets (also called segments), managing their flow and integrity throughout the transmission. It establishes connections with a three-way handshake (SYN, SYN-ACK, ACK) and closes them with an exchange of FIN and ACK segments. This automated negotiation ensures that both communicating devices agree on connection parameters, such as initial sequence numbers, before data transfer begins.

To establish a TCP connection, each endpoint needs an IP address and a port number. The IP address identifies the device, while the port number ensures data reaches the appropriate application (e.g., a web browser or email client).
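To make the handshake and the IP-address-plus-port pairing concrete, here is a minimal sketch using only Python’s standard `socket` and `threading` modules. It runs a one-shot echo server on the loopback address; the operating system performs the three-way handshake inside `connect()` and `accept()`, so the application never sees SYN or ACK packets directly. The function name `tcp_echo_once` is hypothetical and chosen for illustration.

```python
import socket
import threading


def tcp_echo_once(message: bytes) -> bytes:
    """Open a loopback TCP connection, send `message`, and return what the server echoes."""
    # Server: an IP address plus a port identifies the listening application.
    # Port 0 asks the operating system to pick any free port.
    with socket.create_server(("127.0.0.1", 0)) as server:
        host, port = server.getsockname()

        def serve() -> None:
            conn, _peer = server.accept()  # returns after the three-way handshake completes
            with conn:
                data = conn.recv(1024)     # small message; real code loops until a full message arrives
                conn.sendall(data)         # echo it back on the same two-way connection

        worker = threading.Thread(target=serve)
        worker.start()

        # Client: connect() sends SYN, receives SYN-ACK, and replies with ACK.
        with socket.create_connection((host, port)) as client:
            client.sendall(message)
            reply = client.recv(1024)
        worker.join()
    return reply
```

Calling `tcp_echo_once(b"hello")` returns `b"hello"`: the bytes travel through the same connection in both directions, which is the bidirectional behaviour described above.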

Real-Life Examples of TCP In Action

To better understand what TCP is all about, here are some classic use cases.

Text-Based Communication

Reliable message delivery is essential in text-based applications, where missing or out-of-order data could alter the meaning of a conversation. This is why TCP is used in services like:

- iMessage

- Instagram Direct Messages

File Transfers

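When transferring files, ensuring data integrity is crucial. TCP guarantees that files arrive complete and in the correct order. File transfer protocols built on TCP, such as FTP, go a step further and use two dedicated connections: a control connection for commands and a data connection for the file itself.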
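One ingredient of that integrity guarantee is a per-segment checksum. The sketch below implements the 16-bit ones’-complement Internet checksum described in RFC 1071, which both TCP and UDP use. The real TCP checksum also covers a pseudo-header containing the IP addresses; that part is omitted here for brevity. When a segment fails the check, the receiver discards it, and because it is never acknowledged, the sender retransmits it. The helper names `internet_checksum` and `is_intact` are hypothetical.

```python
def internet_checksum(data: bytes) -> int:
    """16-bit ones'-complement checksum (RFC 1071), without the TCP pseudo-header."""
    if len(data) % 2:
        data += b"\x00"  # pad odd-length input with a zero byte
    total = 0
    for i in range(0, len(data), 2):
        total += (data[i] << 8) | data[i + 1]      # add each 16-bit big-endian word
        total = (total & 0xFFFF) + (total >> 16)   # fold any carry back into the low 16 bits
    return ~total & 0xFFFF


def is_intact(data_with_checksum: bytes) -> bool:
    """Re-sum an even-length payload plus its appended checksum; undamaged data sums to 0."""
    return internet_checksum(data_with_checksum) == 0
```

For example, `internet_checksum(b"abcd")` is `0x3B39`; appending those two bytes and re-running the check yields 0, while flipping any bit of the payload makes `is_intact` return `False`.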

Web Browsing (HTTP/HTTPS)

Web pages are delivered over HTTP/HTTPS, which (up to HTTP/2) runs on top of TCP. TCP handles error detection, flow control, retransmission of lost packets, and proper data sequencing. For example, when you load a webpage on Netflix or YouTube, TCP ensures that each part of the page loads correctly.

Email Transmission (SMTP)

Email services rely on TCP to ensure messages are sent and received reliably. SMTP (Simple Mail Transfer Protocol) uses TCP to establish a connection with an email server, ensuring complete message delivery; encryption is added separately with TLS.

Examples of email providers using TCP include Gmail, Outlook, and Yahoo Mail.

What is UDP?

User Datagram Protocol (UDP) is a core communication protocol known for its low latency and bandwidth efficiency. Despite having a reputation for being less reliable than TCP, UDP is integral to streaming strategies due to its ability to minimise delays. This makes it an excellent choice for real-time applications such as live streaming, video conferencing, and gaming, where immediate data transmission is prioritised over perfect accuracy.

So, what is UDP streaming used for? Unlike TCP, UDP speeds up communications by transmitting data without first establishing a connection. This allows for rapid data transfer, which can be beneficial or problematic depending on the application. The downside is that UDP lacks built-in error correction, meaning packets can be lost or received out of order. However, for time-sensitive content, such as UDP live streaming or online gaming, speed takes precedence over perfection.

How Does UDP Work?

UDP streaming is particularly useful for time-sensitive transmissions where low latency is essential. UDP operates by sending small data packets, known as datagrams, directly to target computers without confirming that they arrive or that they arrive in order; its only integrity check is a basic checksum. This streamlined approach enables faster data exchange, making UDP an optimal choice for applications that require minimal delay.

While often compared to TCP, UDP differs significantly in its transmission model. TCP ensures data integrity by establishing a connection and using error-checking mechanisms. In contrast, UDP is a “fire-and-forget” protocol—data is sent without confirmation of receipt. Because of this, packets may be lost without warning. However, applications can implement additional protocols, such as RTP or RTCP, to compensate for these shortcomings and enhance reliability.

UDP runs on top of IP and relies on the routers between the sending and receiving systems to forward each datagram to its destination. Because UDP itself never confirms delivery, an application that expects a reply typically waits for a set time; if no reply arrives within that window, it either resends the request or stops trying.
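The resend-or-give-up pattern lives entirely in the application, not in UDP. Below is a minimal sketch of it with Python’s standard `socket` module: `settimeout` bounds each wait, and the loop resends a fixed number of times before giving up. The helpers `udp_request` and `start_udp_server`, the 0.2-second timeout, and the three retries are assumptions for illustration, not recommended values.

```python
import socket
import threading


def udp_request(payload: bytes, addr, timeout: float = 0.2, retries: int = 3):
    """Send one datagram and wait for a reply, resending on timeout (application-level retry)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        for _ in range(retries):
            sock.sendto(payload, addr)  # no handshake and no delivery confirmation from UDP itself
            try:
                reply, _sender = sock.recvfrom(2048)
                return reply
            except socket.timeout:
                continue  # nothing arrived within the time frame: resend
    return None  # stop trying after `retries` attempts


def start_udp_server(reply: bool = True) -> socket.socket:
    """Toy loopback server for the demo; with reply=False it silently drops every datagram."""
    server = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    server.bind(("127.0.0.1", 0))

    def loop() -> None:
        while True:
            data, peer = server.recvfrom(2048)
            if reply:
                server.sendto(data.upper(), peer)

    threading.Thread(target=loop, daemon=True).start()
    return server
```

Against `start_udp_server()`, `udp_request(b"ping", server.getsockname())` returns `b"PING"`; against a silent server it returns `None` once every attempt has timed out.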

Despite its reputation for being unreliable, UDP has several advantages. It reduces overhead, making it a practical option for users with limited bandwidth or slower internet connections. Many video streaming services, including IPTV and live broadcasts, use UDP streaming protocols to maintain smooth playback without buffering delays.

Real-Life Example of UDP Protocol in Action

Understanding UDP’s technical workings is one thing, but seeing it in real-world applications can provide better clarity. Here are some practical examples:

Video Conferencing

Online video meetings have become essential for business and personal communication. Platforms like Zoom, Skype, and Google Meet rely on UDP for real-time conversations. Because video conferencing prioritises speed over perfect packet delivery, UDP keeps delay to a minimum, so conversations stay fluid even when a few packets are lost.

Voice Over IP (VoIP)

Many apps support voice calls and other real-time audio features. These Voice over IP (VoIP) services convert voice into digital packets and transmit them over the internet using UDP. Apps like WhatsApp, Viber, and Google Hangouts use this method to enable smooth voice communication. The minimal delay provided by UDP helps conversations feel natural and instantaneous.

Domain Name Systems (DNS)

UDP is used in DNS requests to resolve domain names into IP addresses quickly. It enables the end user to access a suitable server when they enter a domain into their web browser. Because DNS queries involve small data packets that require fast responses, UDP’s speed advantage makes it the preferred choice over TCP for these transactions.
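A DNS query is small enough to fit in a single UDP datagram, which is why no connection setup is needed. The sketch below builds a minimal query message following the RFC 1035 wire format (12-byte header, length-prefixed name labels, query type and class) using only Python’s `struct` module. The function name `build_dns_query` and the fixed query ID are assumptions for illustration; a real client would randomise the ID and parse the reply.

```python
import struct


def build_dns_query(name: str, query_id: int = 0x1234, qtype: int = 1) -> bytes:
    """Build a minimal DNS query message (RFC 1035) for `name`; qtype 1 asks for an A record."""
    # Header: ID, flags (0x0100 = recursion desired), QDCOUNT=1, ANCOUNT=NSCOUNT=ARCOUNT=0
    header = struct.pack("!HHHHHH", query_id, 0x0100, 1, 0, 0, 0)
    # QNAME: each label is prefixed by its length, and the name ends with a zero byte
    qname = b"".join(
        bytes([len(label)]) + label.encode("ascii")
        for label in name.rstrip(".").split(".")
    ) + b"\x00"
    question = qname + struct.pack("!HH", qtype, 1)  # QTYPE, then QCLASS 1 (IN)
    return header + question
```

`build_dns_query("example.com")` produces a 29-byte message. Sending it is a single `sock.sendto(query, (resolver_ip, 53))` on a UDP socket, where `resolver_ip` is whatever resolver you use.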

Live Streaming

Live streaming services often use UDP for efficient data transmission. UDP video streaming is especially beneficial for real-time broadcasts, where small data losses are acceptable compared to potential buffering. Many platforms use UDP alongside other protocols, such as RTP, to facilitate uninterrupted streaming.
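RTP fills some of UDP’s gaps by stamping each packet with a sequence number (so receivers can spot loss and reordering) and a media timestamp (so they can play frames at the right time). The sketch below packs and parses the fixed 12-byte RTP header defined in RFC 3550, leaving out the optional CSRC list and header extensions. The helper names, the dynamic payload type 96, and the 90 kHz video clock used in the example are assumptions for illustration.

```python
import struct

RTP_VERSION = 2


def build_rtp_header(seq: int, timestamp: int, ssrc: int,
                     payload_type: int = 96, marker: bool = False) -> bytes:
    """Pack the fixed RTP header: V=2, no padding, no extension, no CSRCs."""
    byte0 = RTP_VERSION << 6                              # V(2) P(1) X(1) CC(4)
    byte1 = (int(marker) << 7) | (payload_type & 0x7F)    # M(1) PT(7)
    return struct.pack("!BBHII", byte0, byte1,
                       seq & 0xFFFF, timestamp & 0xFFFFFFFF, ssrc & 0xFFFFFFFF)


def parse_rtp_header(packet: bytes) -> dict:
    """Unpack the first 12 bytes of an RTP packet."""
    byte0, byte1, seq, timestamp, ssrc = struct.unpack("!BBHII", packet[:12])
    if byte0 >> 6 != RTP_VERSION:
        raise ValueError("not an RTP version 2 packet")
    return {"marker": bool(byte1 >> 7), "payload_type": byte1 & 0x7F,
            "seq": seq, "timestamp": timestamp, "ssrc": ssrc}
```

With a 90 kHz clock and 30 frames per second, the timestamp advances by 3000 per frame, so frame 1 would carry `build_rtp_header(seq=1, timestamp=3000, ssrc=...)`. A gap in `seq` on the receiving side is the signal that a packet went missing.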

What is QUIC?

QUIC (Quick UDP Internet Connections) is a transport layer network protocol originally developed by Google and later standardised by the IETF (RFC 9000) to improve performance over TCP. It is built on top of UDP but incorporates many of TCP’s reliability features while reducing latency.

It establishes a connection faster than TCP by combining the transport and encryption handshakes, which reduces the number of round trips required. Encryption is built in, using TLS 1.3. QUIC also carries multiple independent streams over one connection, so a lost packet delays only the stream it belongs to instead of stalling all data behind it, as happens with TCP (head-of-line blocking).
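The handshake saving is easiest to see as round-trip arithmetic. The idealised model below counts round trips before the first response byte arrives, assuming no packet loss, no server processing time, and no TCP Fast Open or TLS session resumption over TCP. `time_to_first_response` is a hypothetical helper, and real-world timings will differ.

```python
def time_to_first_response(rtt_ms: float, transport: str) -> float:
    """Idealised time (ms) until the first response byte arrives, counted in round trips."""
    round_trips = {
        "tcp+tls1.3": 3,  # TCP handshake (1) + TLS 1.3 handshake (1) + request/response (1)
        "quic": 2,        # combined QUIC + TLS 1.3 handshake (1) + request/response (1)
        "quic-0rtt": 1,   # resumed session: the request travels with the first flight
    }
    return round_trips[transport] * rtt_ms
```

On a 100 ms round-trip path, this model gives 300 ms for TCP with TLS 1.3, 200 ms for a fresh QUIC connection, and 100 ms for a resumed QUIC connection using 0-RTT. The gap widens as latency grows, which is why the benefit is most noticeable on long-distance or mobile connections.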

Real-Life Examples of QUIC

- Google Chrome & YouTube: Google uses QUIC to accelerate loading times and reduce buffering.

- Facebook & Instagram: These platforms leverage QUIC for faster data transfers.

- Netflix: QUIC is increasingly being adopted to enhance streaming performance, improving load times and reducing interruptions.

What is RIST?

RIST (Reliable Internet Stream Transport) is a low-latency video transport protocol designed to deliver high-quality streams over unreliable networks.

It employs error correction mechanisms to ensure smooth delivery of video packets over unstable networks, chiefly NACK-based retransmission of lost packets, optionally combined with forward error correction (FEC), to maintain stream integrity.
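Forward error correction lets a receiver rebuild a lost packet without waiting for a retransmission. The simplest form XORs a group of equal-length packets into one extra parity packet; if any single packet in the group is lost, XORing the survivors with the parity recovers it. This is a sketch of that basic idea only. Professional schemes such as SMPTE 2022-1 arrange XOR parity in rows and columns to repair more loss patterns, and the helper names below are hypothetical.

```python
from functools import reduce


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Byte-wise XOR of two equal-length packets."""
    return bytes(x ^ y for x, y in zip(a, b))


def make_parity(group: list) -> bytes:
    """Sender side: XOR every packet in the group into one parity packet."""
    return reduce(xor_bytes, group)


def recover(received: list, parity: bytes) -> bytes:
    """Receiver side: rebuild the single missing packet (marked None) from survivors and parity."""
    survivors = [p for p in received if p is not None]
    return reduce(xor_bytes, survivors, parity)
```

With the group `[b"AAAA", b"BBBB", b"CCCC"]`, losing the middle packet and calling `recover([b"AAAA", None, b"CCCC"], parity)` returns `b"BBBB"`. The trade-off is bandwidth: here every three media packets cost a fourth packet of overhead, and two losses in the same group still need retransmission.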

Real-Life Examples of RIST

- Live Broadcasts: Used by television networks for secure, high-quality live video feeds.

- Remote Production: Broadcasters employ RIST to transfer high-resolution video feeds from on-location shoots.

What is SRT?

SRT (Secure Reliable Transport) is an open-source video transport protocol designed to optimize live streaming over unpredictable networks, improving reliability and security.

This protocol uses ARQ (Automatic Repeat reQuest) and FEC (Forward Error Correction) to minimize packet loss and jitter. It also provides end-to-end encryption, making it a secure alternative to traditional streaming protocols.
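With ARQ, the receiver watches sequence numbers and asks the sender to resend anything that is skipped. The sketch below shows only that receiver-side gap detection. It is a simplification, not SRT’s implementation: real SRT also sends periodic loss reports, uses 31-bit sequence numbers that wrap around, and drops packets that would arrive too late for the configured latency. The class `ArqReceiver` is hypothetical.

```python
class ArqReceiver:
    """Receiver-side loss detection for NAK-based ARQ (simplified: no wraparound, no timers)."""

    def __init__(self) -> None:
        self.expected = 0      # next sequence number we expect to see
        self.missing = set()   # sequence numbers reported lost and not yet recovered
        self.received = {}     # seq -> payload

    def on_packet(self, seq: int, payload: bytes) -> list:
        """Store a packet and return the sequence numbers to request again (the NAK list)."""
        nak = []
        if seq in self.missing:           # a retransmission filled an earlier gap
            self.missing.discard(seq)
        elif seq >= self.expected:
            nak = list(range(self.expected, seq))  # every skipped number is presumed lost
            self.missing.update(nak)
            self.expected = seq + 1
        self.received[seq] = payload
        return nak
```

If packets 0 and 3 arrive, the second call returns `[1, 2]`, which the receiver would send back as a NAK; when the retransmitted packet 1 arrives, only 2 remains outstanding.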

Real-Life Examples of SRT

- ESPN & Sky Sports: Utilized for broadcasting sports events with low latency.

- Cloud-Based Video Platforms: Many streaming services implement SRT to ensure stable and secure content delivery.

- IPTV Services: Used in IPTV UDP streaming for enhanced performance.

What is WebRTC?

WebRTC (Web Real-Time Communication) is an open-source framework that enables real-time peer-to-peer communication for video and voice calls over web browsers without requiring additional plugins.

WebRTC relies on a set of protocols (such as ICE, DTLS, and SRTP) to set up direct communication between devices, typically over UDP, enabling low-latency data transfer. It dynamically adjusts to network conditions to keep audio and video streams flowing without interruption.

Real-Life Examples of WebRTC

- Zoom & Google Meet: WebRTC is the backbone of many video conferencing applications.

- Online Gaming: Enables real-time voice and video chat in multiplayer games.

- Customer Support: Used in live chat and video call support systems.

What is LL-HLS?

LL-HLS (Low Latency HTTP Live Streaming) is an extension of Apple’s HLS protocol designed to minimize latency in live video streaming.

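It reduces latency by dividing each media segment into smaller partial segments and publishing them as soon as they are ready, so players can start playing content before the full segment exists. Traditional HLS often keeps viewers well over ten seconds behind the live edge; LL-HLS can bring that down to a few seconds.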
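A rough way to see the gain is to compare how far behind the live edge a player starts. In the HLS specification, players by default stay about three target durations back (the HOLD-BACK default), while LL-HLS lets them hold back by a multiple of the much shorter part duration (PART-HOLD-BACK, recommended to be at least three parts). The sketch below is only that playlist-level arithmetic: it ignores encoding, packaging, and CDN delays, and `live_edge_offset` is a hypothetical helper.

```python
def live_edge_offset(segment_seconds: float, part_seconds: float = None,
                     multiplier: float = 3) -> float:
    """Approximate playlist hold-back in seconds.

    Classic HLS: about `multiplier` x segment (target) duration.
    LL-HLS: about `multiplier` x partial-segment duration.
    """
    unit = part_seconds if part_seconds is not None else segment_seconds
    return multiplier * unit
```

With 6-second segments, classic HLS holds back about `live_edge_offset(6.0)` = 18 seconds; splitting those segments into 0.5-second parts gives `live_edge_offset(6.0, 0.5)` = 1.5 seconds of hold-back.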

Real-Life Examples of LL-HLS

- Apple TV & iOS Apps: Many Apple applications utilize LL-HLS for smoother live streaming experiences.

- Twitch & YouTube Live: LL-HLS enhances interactive live streaming, reducing latency for real-time audience engagement.

- Sports Streaming: Platforms broadcasting live sports use LL-HLS to keep playback close to real time.

What is E-RTMP?

Enhanced RTMP (E-RTMP) is an upgraded version of the legacy RTMP, designed to address modern streaming needs. While RTMP was a standard for live streaming, its limitations in supporting newer codecs and latency-sensitive applications led to the need for an improved protocol.

This upgraded version builds upon traditional RTMP but incorporates support for contemporary codecs such as HEVC (H.265) and AV1, making it more efficient in delivering high-quality video at lower bitrates. It also introduces nanosecond timestamp precision, allowing better synchronization across multiple streaming devices. Additionally, E-RTMP supports multitrack audio and video, ensuring greater flexibility for live broadcasts with multiple language tracks or alternative camera angles.

Real-Life Applications of E-RTMP

- Live Sports & Esports Streaming: Delivers low-latency ingest for real-time audience engagement.

- Corporate Webinars & Virtual Events: Supports simultaneous video tracks for multi-camera live events.

- Social Media Live Streaming: Platforms requiring modern codecs and high efficiency benefit from E-RTMP’s improved capabilities.

What is HESP?

The High Efficiency Streaming Protocol (HESP) is an HTTP-based adaptive bitrate streaming protocol that delivers sub-second latency while maintaining high-quality streaming performance. HESP is designed to compete with traditional protocols such as Low Latency HLS and MPEG-DASH, offering significant improvements in stream startup times and channel switching speed.

HESP uses two distinct streams: an initialization stream and a continuation stream. The initialization stream ensures an almost instantaneous startup, while the continuation stream maintains smooth playback. Unlike traditional HTTP-based streaming protocols, HESP minimizes buffering delays by reducing the dependency on large media segments, thereby achieving sub-second latency.

Real-Life Applications of HESP

- Interactive Streaming & Gaming: Ideal for real-time audience interaction where ultra-low latency is crucial.

- IPTV & Broadcast TV: Enables seamless channel switching with minimal delays, improving user experience.

- Telemedicine & Remote Monitoring: Provides high-speed video transmission essential for real-time diagnostics and consultations.

TCP vs UDP – Which Is Better for Streaming?

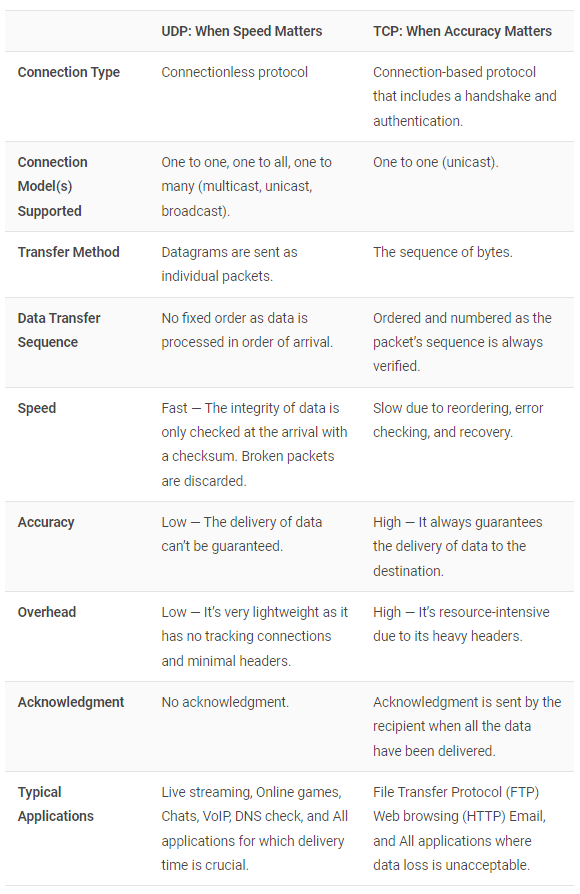

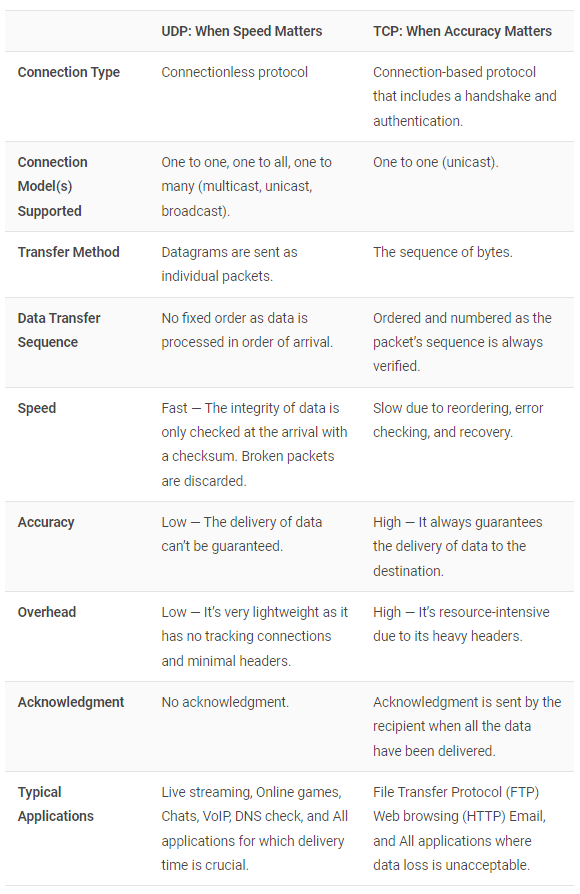

When it comes to data transmission, TCP and UDP are the two best-known transport protocols. Both play crucial roles in networking, but their performance differs significantly depending on the application.

Let’s take a closer look at the core differences of TCP vs UDP:

- Reliability: TCP ensures data integrity through error-checking, retransmissions, and ordered delivery, making it ideal for applications that require precision, such as file transfers and web browsing. UDP, on the other hand, sacrifices reliability for speed by sending data without checking for errors or order.

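- Security: Neither TCP nor UDP encrypts data on its own; encryption comes from layers such as TLS (commonly used over TCP) or DTLS (over UDP). TCP’s handshake makes source-address spoofing harder, whereas connectionless UDP is more easily spoofed and is frequently abused in reflection and amplification DDoS attacks. Newer protocols, such as QUIC and SRT, integrate encryption directly.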

- Latency: TCP introduces delays due to its three-way handshake and congestion control mechanisms, while UDP’s connectionless approach allows for ultra-low latency, making it ideal for real-time applications like video streaming and online gaming.

- Suitability for Streaming: UDP is preferred for live streaming and interactive applications due to its speed and low latency, whereas TCP is used for video-on-demand (VOD) services where data integrity is critical (e.g., Netflix uses TCP to ensure high-quality playback).
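Part of TCP’s extra delay comes from in-order delivery: if one packet is lost, everything behind it waits for the retransmission, even data that has already arrived. The sketch below models that head-of-line blocking with hypothetical arrival times in milliseconds, comparing TCP-style in-order release with UDP-style release-on-arrival. `delivery_times` is a hypothetical helper, and the numbers are invented for illustration.

```python
def delivery_times(arrivals: dict, in_order: bool) -> dict:
    """Map each sequence number to the time (ms) the application can use it.

    arrivals: seq -> time the packet (or its retransmission) reached the receiver.
    in_order=True models TCP; in_order=False models plain UDP.
    """
    if not in_order:
        return dict(arrivals)  # UDP-style: hand each datagram over as soon as it arrives
    delivered, ready_at = {}, 0.0
    for seq in sorted(arrivals):
        ready_at = max(ready_at, arrivals[seq])  # cannot release seq before all earlier ones
        delivered[seq] = ready_at
    return delivered
```

Suppose packet 1 is lost and its retransmission arrives at 110 ms, while packets 2 and 3 arrive at 30 ms and 50 ms. With `in_order=True`, packets 1, 2, and 3 all reach the application at 110 ms; with `in_order=False`, packets 2 and 3 are usable at 30 ms and 50 ms, and a live player can simply conceal the gap.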

Here is a detailed comparison between TCP and UDP with the other emerging protocols:

| Protocol | Reliability | Latency | Security | Suitability for Applications |
|---|---|---|---|---|
| TCP | High reliability; ensures error detection, packet ordering, and retransmission | Higher latency due to handshakes, retransmission, and in-order delivery | No built-in encryption; secured by running TLS on top (as in HTTPS) | Best for file transfers, web browsing, email, and applications requiring accurate data delivery |
| UDP | Low reliability; no retransmission or ordering, so packets can be lost or arrive out of order | Low latency, ideal for time-sensitive applications | No built-in security; requires external security layers | Best for real-time streaming (live video, gaming, VoIP), DNS, and applications where occasional packet loss is acceptable |
| QUIC | High reliability; acknowledgement-based loss recovery and retransmission like TCP, without head-of-line blocking between streams | Very low latency (faster connection establishment than TCP) | Built-in encryption via TLS 1.3 for secure communication | Ideal for web applications, video streaming, and services needing fast, reliable connections like Google services, YouTube, and Cloudflare |
| SRT | High reliability; automatic retransmission and error correction even over unreliable networks | Low latency, designed for long-distance live video streaming | Provides end-to-end encryption and secure key exchange | Perfect for live streaming, especially in unreliable network conditions (e.g., remote broadcasts, sports events) |
| RIST | High reliability; offers forward error correction and retransmission for lost packets | Low latency; optimized for low-latency live streaming | Includes encryption for secure transmission | Ideal for broadcast and live streaming applications, especially in professional environments with critical reliability requirements |
| WebRTC | Moderate reliability; uses FEC and retransmission for better reliability | Very low latency; optimized for real-time communication | Supports encryption (DTLS and SRTP) for secure communication | Best for peer-to-peer video conferencing, live streaming, and real-time applications (e.g., Zoom, Skype, and live interactions) |
| E-RTMP | High reliability inherited from TCP, which RTMP runs over | Low to moderate latency; improvements over traditional RTMP | Encryption available via RTMPS (RTMP over TLS) | Ideal for adaptive bitrate streaming, multitrack audio/video, and professional live streaming (e.g., social media, gaming platforms) |
| HESP | High reliability with adaptive bitrate control for low-latency environments | Extremely low latency (sub-second); designed to challenge traditional protocols | Supports encryption and secure data transmission | Best for ultra-low-latency live streaming, real-time video distribution, and interactive broadcasts (e.g., gaming, eSports, news) |

Future Trends and Considerations in Streaming Protocols

Several emerging trends and technologies are shaping the future of networking and protocol selection. These trends focus on enhancing the performance, reliability, and security of streaming protocols. At the same time, they work on accommodating the growing need for faster, more efficient network management.

Multipath TCP

One of the trends is Multipath TCP (MPTCP). It is an extension of the traditional TCP protocol that allows a single connection to use multiple network paths simultaneously. The standard TCP relies on a single path between the client and server. However, MPTCP can dynamically distribute traffic across multiple paths, such as Wi-Fi, 4G, or 5G connections, based on network availability and performance.

MPTCP can benefit streaming in the following ways:

- MPTCP helps to overcome network congestion, packet loss, and service interruptions by seamlessly switching between available network paths.

- It can increase overall throughput, making it suitable for high-bandwidth applications like ultra-high-definition video streaming, immersive gaming, and cloud-based services.

- It could significantly enhance the quality of streaming experiences in environments where network conditions fluctuate, such as during mobile video calls or live video broadcasting from remote locations.
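On Linux (MPTCP has been in the mainline kernel since 5.6), an application can request MPTCP by passing `IPPROTO_MPTCP` when it creates a socket; Python 3.10+ exposes that constant on Linux. If the peer does not support MPTCP, the connection falls back to regular TCP during the handshake, and which extra paths (for example, Wi-Fi plus cellular) get used is configured at the operating-system level rather than in application code. The sketch below assumes those conditions and falls back to plain TCP when the platform or kernel lacks support; `open_stream_socket` is a hypothetical helper.

```python
import socket


def open_stream_socket():
    """Return (socket, kind), preferring MPTCP and falling back to plain TCP."""
    proto = getattr(socket, "IPPROTO_MPTCP", None)  # only defined on Linux with Python 3.10+
    if proto is not None:
        try:
            return socket.socket(socket.AF_INET, socket.SOCK_STREAM, proto), "mptcp"
        except OSError:
            pass  # kernel built without MPTCP, or it is disabled (e.g. net.mptcp.enabled=0)
    return socket.socket(socket.AF_INET, socket.SOCK_STREAM), "tcp"
```

The rest of the application uses the returned socket exactly like a TCP socket (`connect`, `sendall`, `recv`), which is what makes MPTCP attractive as a drop-in upgrade for streaming clients.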

AI Integration in Networking

AI-driven tools can predict traffic patterns, optimize data routing, detect anomalies, and even adapt streaming protocols in real-time to accommodate network conditions. This integration is poised to improve streaming experiences by dynamically adjusting network settings and protocols based on traffic demands and user behavior.

AI can automatically adjust the parameters of streaming protocols to improve performance. For instance, it can help in dynamically switching between protocols (like TCP and UDP) based on real-time network conditions, ensuring the best possible balance of reliability and low latency.

By leveraging machine learning algorithms, AI can predict network congestion and proactively allocate resources. This will help to prioritize streaming traffic and allow viewers to experience minimal buffering or interruptions. AI can also be used to detect network failures or disruptions and take corrective actions by switching to backup paths, protocols, or rerouting traffic.

FAQs

1. Why is UDP preferred over TCP for live video streaming?

UDP is preferred for live video streaming due to its low latency and faster data transmission. It doesn’t require establishing a connection like TCP, making it more suitable for real-time, time-sensitive content, where minor packet loss is acceptable.

2. How does TCP impact video quality in on-demand streaming services like Netflix and YouTube?

TCP ensures reliable delivery of data, recovering lost packets and maintaining data integrity. Combined with the player’s playback buffer, this delivers video in sequence and without errors, which protects picture quality, but at the cost of higher latency.

3. What advancements have been made in UDP-based streaming for improved reliability?

New techniques, such as forward error correction (FEC) and application-layer protocols like SRT, have improved UDP’s reliability, minimizing packet loss and ensuring better video quality for live streaming without compromising low latency.

4. How does AI influence TCP and UDP performance in video streaming?

AI optimizes TCP and UDP performance by dynamically adjusting streaming protocols based on network conditions. It can predict traffic patterns, detect issues in real-time, and prioritize data, leading to enhanced video quality and reduced buffering.

5. Can TCP be optimized for low-latency live streaming?

Yes, to an extent. TCP can be tuned for lower latency using techniques such as selective acknowledgments (SACKs), reduced retransmission timeouts, and latency-aware congestion control algorithms. TCP still delivers every byte reliably and in order, so a lost packet will always hold up the data behind it.
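As a sketch of per-socket tuning in this spirit, the example below disables Nagle’s algorithm with `TCP_NODELAY`, so small writes are sent immediately instead of being coalesced, and, on Linux only, optionally requests a specific congestion control algorithm through `TCP_CONGESTION`. Which algorithms are available depends on the kernel, so failures are ignored; `low_latency_tcp_socket` is a hypothetical helper, and the right settings for a given service would need measurement.

```python
import socket


def low_latency_tcp_socket(congestion_control: str = None) -> socket.socket:
    """Create a TCP socket tuned for small, latency-sensitive writes."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    # Disable Nagle's algorithm: send small segments right away instead of batching them.
    sock.setsockopt(socket.IPPROTO_TCP, socket.TCP_NODELAY, 1)
    # TCP_CONGESTION is Linux-only; the named algorithm must be available in the kernel.
    if congestion_control and hasattr(socket, "TCP_CONGESTION"):
        try:
            sock.setsockopt(socket.IPPROTO_TCP, socket.TCP_CONGESTION,
                            congestion_control.encode())
        except OSError:
            pass  # algorithm not available or not permitted: keep the system default
    return sock
```

Note that neither setting changes TCP’s reliability guarantees; they only reduce how long data waits before it is sent.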

6. What role do hybrid streaming protocols play in balancing TCP and UDP advantages?

Hybrid streaming protocols combine the strengths of both TCP and UDP, offering the reliability of TCP and the low latency of UDP. They dynamically choose the best protocol based on network conditions, ensuring smooth streaming with minimal buffering.

7. How do businesses choose between TCP and UDP for video streaming applications?

Businesses choose between TCP and UDP based on their specific needs. For on-demand video streaming, TCP is preferred for reliable delivery, while UDP is ideal for live streaming where low latency is crucial and minor packet loss is acceptable.

8. Will emerging protocols like QUIC and RIST replace traditional TCP and UDP for streaming?

Emerging protocols like QUIC and RIST offer significant improvements in speed, security, and low latency, but they are not likely to completely replace TCP and UDP. Instead, they will complement or enhance them for specific use cases, providing more efficient solutions for modern streaming needs.

Conclusion

As streaming demands continue to grow, adopting the right protocol can make a significant difference in performance, security, and user experience.

UDP and TCP are two important protocols that continue to have a profound impact on streaming ecosystems. Understanding when to use UDP vs TCP helps businesses and content creators optimise their streaming strategies. While TCP ensures data reliability, UDP’s speed advantage makes it the go-to option for real-time applications where low latency is crucial.

If you’re ready to find out how our optimized live streaming platform can change your business, check out Dacast’s 14-day free trial (no credit card required) and see for yourself. Just click the button below, and you’ll be signed up in minutes.

Thanks for reading. If you have any questions or experiences to share, please let us know in the chat box below. And for regular tips on live streaming, join our LinkedIn group.
