CEA-608 vs. CEA-708: How to Add Closed Captions to a Live Stream [2025 Update]

There are roughly 466 million deaf or hard-of-hearing people in the world today. These people often require closed captions to understand what is taking place in videos.

In many places, accommodating these viewers with captions is a legal requirement. Beyond compliance, many brands are becoming more inclusive and courteous by making their content accessible to a larger audience.

In this post, we will discuss everything you need to know about CEA-608 and CEA-708 closed captions. We’ll start by looking at what closed captions are. Then, we’ll discuss why closed captions are important.

From there, we’ll look at the two main US legal standards for closed captions: CEA-608 and CEA-708. We’ll close by looking at how to use closed captions with live streaming and Dacast’s support for closed captions when using our streaming platform.

Table of Contents:

- What are Closed Captions?

- Why are Captions Important?

- The Rise of AI-Generated Captions

- What are CEA-608 and CEA-708?

- Use Cases for Closed Captions in Business

- Closed Captioning for OTT and Adaptive Streaming

- How to Add Captions to a Live Stream

- How to Add Closed Captions on VOD Content on Dacast

- Streaming Video Provider Support for Closed Captions

- SEO and Compliance Benefits of Closed Captions

- Future of Closed Captioning

- Conclusion

What are Closed Captions?

Closed captions (CC) are similar to subtitles. They refer to the embedded text in a video stream that allows a viewer to read the text instead of listening to voices in the broadcast. It’s simply the text version of the content you would otherwise listen to.

Typically, closed captions can easily be turned on and off by the viewer. As dialogue or monologue takes place, the captions match the spoken text. Captions will also often include information about music or other important sounds that are included in the broadcast, such as booms or gunshots during an action movie, or whistles during a sporting match.

Viewers who are deaf or hard of hearing can follow along by reading with no need to listen to the live broadcast to fully understand what is happening.

The Difference Between Closed Captions and Open Captions

The difference between closed captions and open captions is the viewers’ ability to turn them off at their command. As we mentioned, closed captions can be turned on or off with the click of a button.

Open captions, on the other hand, cannot be turned off. They simply stay on the screen.

Why are Captions Important?

Captions are important for a few different reasons. First, embedding captions is the right thing to do. It builds rapport with the audience that requires this accommodation and allows you to know you’re helping those who need it.

Captions can help grow your audience. Around 15% of American adults are hard-of-hearing or deaf. By making your content accessible, you can tap into this audience.

However, captions aren’t only for those who were born deaf or hard of hearing. As people age, they tend to lose their hearing. Millions of elderly people rely on closed captions to access information via video content.

Additionally, captions are commonly used by people who are trying to learn a new language. They are also used on TVs located in noisy environments, like sports bars.

Therefore, to maximize your audience size, you should include closed captions.

Captions are also a legal requirement in many nations. In the United States, in particular, all television broadcasts are required to include closed captions. In addition, online broadcasts that are shown concurrently or near-concurrently (within 12 hours) on TV are required to include closed captions as well.

That said, it’s important to choose a live video provider with a streaming setup that supports closed captions so that you can comply with your local guidelines and restrictions.

The Rise of AI-Generated Captions

AI-generated captions are gaining popularity due to their ability to reduce costs, speed up deployment, and support multilingual content. As businesses and broadcasters focus on accessibility, AI-powered live transcription tools are a cost-effective way to ensure captions are available in real time. Because these tools rely on machine learning, they can quickly adapt to various languages and dialects, making OTT video accessibility more scalable. This is especially important for meeting the growing demand for WCAG 2.1-compliant captions and ADA-compliant video streaming.

However, AI captions can struggle with accuracy, especially in context-sensitive situations like technical jargon or proper names. For this reason, many organizations are turning to hybrid workflows that combine human review with AI tools, so the final captions meet 2025 broadcasting standards and work with formats like SMPTE-TT and ATSC 3.0 captions.

What are CEA-608 and CEA-708?

CEA-608 and CEA-708 both refer to legal standards in the United States, Canada, and Mexico for closed captioning of TV broadcasts. However, both standards are commonly used worldwide.

CEA-608 (sometimes called EIA-608 or “Line 21” captions) is an older standard and was introduced following lawsuits and legislation aimed at making TV programs accessible to people who are deaf or hard of hearing. This standard was cemented in 1990 with the passage of the Television Decoder Circuitry Act.

CEA-708 is the updated standard, which includes a wider array of features and options. CEA-708 is the wave of the future since it meets FCC (Federal Communications Commission) standards for closed captions that were introduced in 2014, whereas CEA-608 does not.

Let’s take a closer look at what each of these captioning standards means for broadcasters.

CEA-608 (Line 21) Captions

When CEA-608 captions are embedded, they are easy to distinguish by their black box background with uppercase white text. You’ve probably seen this at some point in your life since these captions are commonly used throughout the world.

CEA-608 includes four channels of caption information. This means, for example, that captions could be broadcast in four languages simultaneously. Often, the first channel is used for English captions and the second is used for Spanish.

Another notable aspect of CEA-608 captions is that the fonts, positioning, and text sizes are fixed, which means that broadcasters can’t customize them to their liking.

The original CEA-608 standard supports English, Spanish, French, Portuguese, Italian, German, and Dutch. The standard was also updated to support Korean and Japanese, which are languages that require two bytes for each character in the alphabet.

CEA-608 is becoming less common as digital TV becomes more prevalent since it doesn’t adhere to the most recent regulations in the United States.

CEA-708 Captions

CEA-708 is a newer, updated standard built for the digital television era. The 708 standard supports all the features of CEA-608, plus a new range of features. This includes a wider range of character sets, support for many different caption languages simultaneously, and caption positioning options.

Positioning is important because FCC regulations state that captions cannot block other important on-screen information.

Viewers can also select between 8 fonts, 3 text sizes, and 64 text/background colors. Drop shadow, background opacity, and text edges can also be customized. This makes it easier for viewers with unique needs to customize the way that they view content.
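As a rough illustration of where the figure of 64 colors comes from: CEA-708 describes each of the red, green, and blue components with 2 bits, so there are 4 × 4 × 4 = 64 combinations. The short Python sketch below enumerates them. The mapping of the four 2-bit levels to 8-bit display values (0, 85, 170, 255) is an assumption made for illustration, not something taken from the standard.

```python
from itertools import product

# Assumed 8-bit rendering of the four 2-bit intensity levels (illustrative only).
LEVELS = (0, 85, 170, 255)

# Every combination of 2-bit red, green, and blue values.
palette = [
    (LEVELS[r], LEVELS[g], LEVELS[b])
    for r, g, b in product(range(4), repeat=3)
]

print(len(palette))  # 64
```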

Since CEA-708 supports any characters, it can be used for captioning in just about any language.

Note that it’s possible to include CEA-608 captions in a digital TV broadcast, but you can’t use CEA-708 captions with analog transmission.

Use Cases for Closed Captions in Business

Education: LMS integrations, ADA compliance

Closed captions support students of all abilities, especially in e-learning platforms and virtual classrooms. With ADA-compliant video streaming now expected, using 2025 video captioning standards like IMSC1 and TTML helps ensure accessibility. Dacast’s support for LMS integrations makes it easier for schools to embed compliant videos with captions directly into their courses.

Corporate Training: Multilingual teams

Businesses training global teams need captions to bridge language gaps. AI-generated captions and machine learning captions for video improve accuracy and reduce effort. Captioning software with AI also helps create quick translations, making training videos more accessible across locations.

Events and Webinars: Global accessibility

Live events and webinars reach wider audiences with OTT closed captions and HLS WebVTT subtitles. Tools like live transcription AI and SMPTE-TT captions enhance real-time engagement. OTT video accessibility features, including CVAA closed captioning and WCAG 2.1 captions, ensure that no viewer is left behind.

Government and Legal: Section 508 compliance

Government and legal content must follow strict accessibility laws, including Section 508 and CVAA. Using ATSC 3.0 captions and following 2025 broadcast captioning standards helps ensure compliance. Platforms like Dacast that support captioning for compliance simplify meeting these legal requirements.

Closed Captioning for OTT and Adaptive Streaming

In 2025, closed captioning has evolved to meet the demands of OTT (over-the-top) platforms and adaptive streaming protocols like HLS and MPEG-DASH. Both HLS closed captions and MPEG-DASH subtitles support WebVTT for streaming subtitles, enabling more accurate and efficient caption delivery. This technology ensures accessibility for viewers using a variety of devices and network conditions.

Closed captions for video are crucial for meeting accessibility standards, including CVAA compliance in the U.S. and WCAG 2.1 guidelines globally. They play a significant role in making live and on-demand video content accessible to a wider audience, including those with hearing impairments.

There’s also a key distinction between embedded captions (such as CEA-608 and CEA-708) and sidecar caption files like WebVTT or SRT. Embedded captions are typically part of the video stream, while sidecar files are separate, offering more flexibility in delivery and editing.
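To make the embedded-versus-sidecar distinction concrete, here is a minimal Python sketch that builds an HLS master playlist declaring both kinds of caption track. The `EXT-X-MEDIA` tag types (`CLOSED-CAPTIONS` with `INSTREAM-ID`, and `SUBTITLES` with a `URI`) come from the HLS specification. The helper names, group IDs, bandwidth, and file paths are hypothetical placeholders, not values used by any particular platform.

```python
def media_tag(kind, group_id, name, language, **extra):
    """Build one EXT-X-MEDIA line; string attribute values are quoted."""
    attrs = [
        f"TYPE={kind}",
        f'GROUP-ID="{group_id}"',
        f'NAME="{name}"',
        f'LANGUAGE="{language}"',
    ]
    # Turn keyword names such as instream_id into INSTREAM-ID="...".
    attrs += [
        f'{key.replace("_", "-").upper()}="{value}"'
        for key, value in extra.items()
    ]
    return "#EXT-X-MEDIA:" + ",".join(attrs)


def build_master_playlist():
    lines = [
        "#EXTM3U",
        # Embedded CEA-608 captions carried inside the video stream (channel CC1).
        media_tag("CLOSED-CAPTIONS", "cc", "English", "en", instream_id="CC1"),
        # Sidecar WebVTT subtitles delivered through a separate playlist (hypothetical path).
        media_tag("SUBTITLES", "subs", "English", "en", uri="subs/en.m3u8"),
        # One video variant that references both caption groups (hypothetical values).
        '#EXT-X-STREAM-INF:BANDWIDTH=2500000,CLOSED-CAPTIONS="cc",SUBTITLES="subs"',
        "video/720p.m3u8",
    ]
    return "\n".join(lines) + "\n"


print(build_master_playlist())
```

The embedded track needs no URI because the caption data travels with the video, while the sidecar track points to its own playlist and can be edited or replaced without re-encoding.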

How to Add Captions to a Live Stream

Adding closed captions to a live stream may sound complex, but with the right tools, it’s more accessible than ever in 2025. Most live captioning is handled at the encoder level or through integrated workflows with live transcription providers.

Here’s a simplified version of how the process works:

- Video is captured using cameras connected to live streaming software or a hardware encoder.

- The encoder sends audio to a captioning tool—either built-in or external.

- A captioning service (using AI tools, human transcribers, or both) turns the audio into text in real time.

- The text is synced with the video feed and sent back to the encoder.

- Captions are then embedded in the stream, often through SDI or IP-based workflows, and sent to your video platform—like Dacast—for broadcasting.

Some systems add a short delay to better sync the captions with the spoken audio. This ensures your audience sees accurate and timely captions without major lag.
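The effect of that delay can be sketched with a little arithmetic. The Python example below is a simplified model, not how any specific encoder works: it assumes each caption has a time when the words were spoken and a time when the captioning service returned the text, and that the encoder holds the video back by a fixed number of seconds. All names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class CaptionEvent:
    spoken_at: float  # seconds into the stream when the words were spoken
    ready_at: float   # seconds into the stream when the caption text arrived


def caption_lag(events, video_delay):
    """Return how far each caption trails its audio in the delayed output."""
    lags = []
    for event in events:
        # The delayed video plays the spoken words at this time.
        video_out = event.spoken_at + video_delay
        # A caption cannot be shown before its text exists.
        shown_at = max(event.ready_at, video_out)
        lags.append(round(shown_at - video_out, 3))
    return lags


events = [CaptionEvent(10.0, 12.5), CaptionEvent(20.0, 24.0)]
print(caption_lag(events, video_delay=3.0))  # [0.0, 1.0]
```

In this model, a 3-second video delay fully hides a 2.5-second transcription delay, while a 4-second transcription delay still leaves the caption 1 second behind.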

Closed Captioning Software Options

Here are a few of the top closed captioning software options:

As you research and compare other options, be sure to focus on platforms that offer support for live captioning specifically.

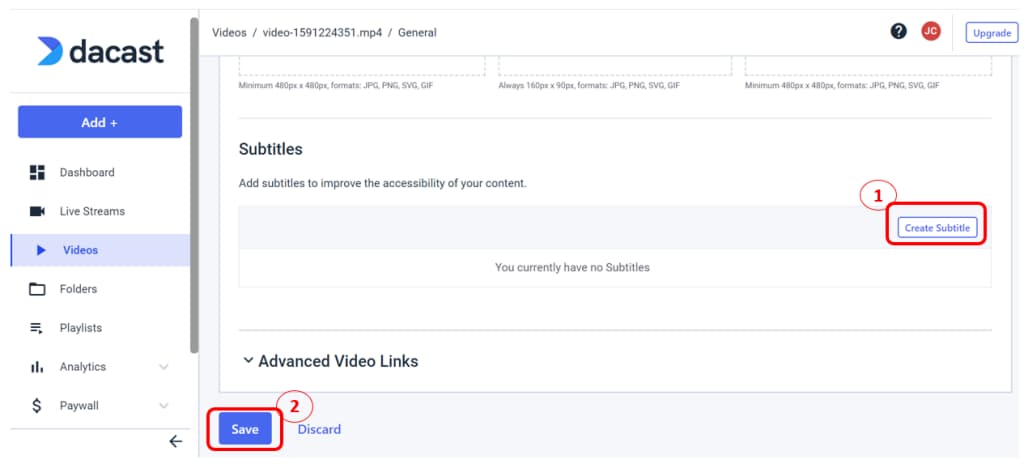

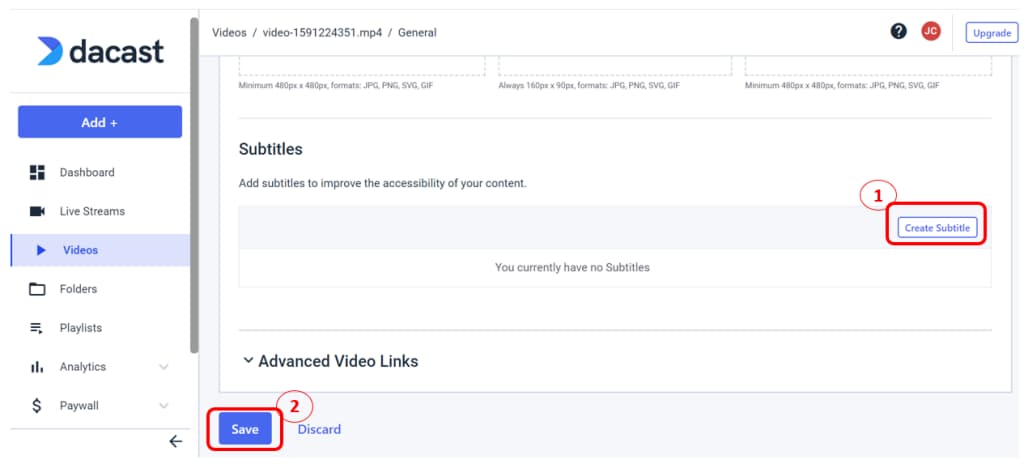

How to Add Closed Captions on VOD Content on Dacast

Dacast allows broadcasters to add subtitles to on-demand video files without the integration of third-party software or an encoder.

To add subtitles to your VOD content, click on the video file you wish to modify. Go to the “General” tab, and scroll down to “Subtitles”:

If you don’t already have subtitle files in WebVTT or SRT format, they are easy to create in a simple text editor.

Here are two examples of WebVTT and SRT files.

WebVTT Subtitle File Example

VTT files are very easy to create using Notepad or any other plain text editor program. A VTT file starts with a WEBVTT header line, followed by a blank line and then the cues. Below is an example of what cues look like in a VTT file. The first timestamp is when the subtitle is supposed to appear. The second timestamp is when the subtitle is supposed to disappear. Note that WebVTT uses a period before the milliseconds:

WEBVTT

00:00:06.000 --> 00:00:11.000
I hear there is a motion in the wind.

00:00:12.500 --> 00:00:16.500
Yes, I have heard it as well. Like a soft breeze of change.

00:00:18.500 --> 00:00:24.000
Isn’t this example a little too poetic for subtitle creation?

00:00:25.500 --> 00:00:34.000
Very, but it still gets the point across.

If you want to use languages other than English, the VTT files must be saved using UTF-8 encoding to have characters displayed properly.
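If you would rather generate a VTT file than type it by hand, the following Python sketch shows one way to do it. It assumes cues are given as (start seconds, end seconds, text) tuples; the function names and the output file name are hypothetical. It formats timestamps with a period before the milliseconds, as WebVTT expects, and saves the file as UTF-8 so non-English characters display properly.

```python
def format_vtt_timestamp(seconds):
    """Format a time in seconds as a WebVTT timestamp (HH:MM:SS.mmm)."""
    total_ms = round(seconds * 1000)
    hours, rest = divmod(total_ms, 3_600_000)
    minutes, rest = divmod(rest, 60_000)
    secs, ms = divmod(rest, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d}.{ms:03d}"


def build_vtt(cues):
    """Build WebVTT text from (start_seconds, end_seconds, text) tuples."""
    blocks = ["WEBVTT"]  # required header line
    for start, end, text in cues:
        timing = f"{format_vtt_timestamp(start)} --> {format_vtt_timestamp(end)}"
        blocks.append(f"{timing}\n{text}")
    # Blocks are separated by a blank line.
    return "\n\n".join(blocks) + "\n"


def save_vtt(path, cues):
    """Write the cues to disk, explicitly using UTF-8 encoding."""
    with open(path, "w", encoding="utf-8", newline="\n") as handle:
        handle.write(build_vtt(cues))


cues = [
    (6.0, 11.0, "I hear there is a motion in the wind."),
    (12.5, 16.5, "Sí, yo también lo he oído."),
]
print(build_vtt(cues))
# To write the file: save_vtt("captions.vtt", cues)
```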

SRT Subtitle Example

Here is an SRT example. It functions similarly to the timestamp method of the VTT files.

Entries must be formatted like this:

- Subtitle number (a sequential counter, starting at 1)

- Start time --> End time (with a comma before the milliseconds)

- Subtitle text

- Blank line

This is what it will look like in action:

1
00:15:25,000 --> 00:15:29,000
This is a test subtitle A

2
00:15:29,000 --> 00:15:32,000
This is a test subtitle B

The example above would make the first subtitle (test subtitle A) appear at 15 minutes and 25 seconds in video playback. It would disappear at exactly 15 minutes and 29 seconds.

The second subtitle (test subtitle B) would appear at 15 minutes and 29 seconds, and then it would disappear at 15 minutes and 32 seconds exactly.
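Because the two formats are so close, converting between them is mostly mechanical. The Python sketch below is a minimal, assumption-laden converter: it expects well-formed SRT input with a comma before the milliseconds, adds the WEBVTT header, and switches the comma to a period on timing lines only. The function name is hypothetical, and styling tags or malformed files are not handled.

```python
import re

# Matches SRT-style timestamps such as 00:15:25,000.
SRT_TIME = re.compile(r"(\d{2}:\d{2}:\d{2}),(\d{3})")


def srt_to_vtt(srt_text):
    """Convert simple SRT text to WebVTT text."""
    out = ["WEBVTT", ""]  # header line followed by a blank line
    for line in srt_text.strip().splitlines():
        if "-->" in line:
            # Only timing lines are rewritten; subtitle text is left untouched.
            line = SRT_TIME.sub(r"\1.\2", line)
        out.append(line)
    return "\n".join(out) + "\n"


sample_srt = """1
00:15:25,000 --> 00:15:29,000
This is a test subtitle A

2
00:15:29,000 --> 00:15:32,000
This is a test subtitle B
"""
print(srt_to_vtt(sample_srt))
```

The SRT subtitle numbers are kept as they are, since WebVTT allows an optional identifier line above each cue's timing line.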

Streaming Video Provider Support for Closed Captions

Most online video players support subtitles in the WebVTT format. This is the standard for online captions and subtitles. However, if you’re already creating CEA-608/CEA-708 captions, converting these into WebVTT is an additional burdensome step.

Here at Dacast, our HTML5 video player has native support for CEA-608 and CEA-708 closed captions. Our player will automatically detect 608/708 captions that are embedded in H.264 video packages. No configuration is required. They will automatically be added to your videos. In the video player, a clearly marked button allows viewers to easily turn captions on and off, or to select their preferred language.

If you are already delivering your content via digital TV, sharing closed-captioned content with Dacast couldn’t be easier. This is just one of the many ways we are working to provide professional features to our broadcasters.

SEO and Compliance Benefits of Closed Captions

Closed captions do more than support accessibility—they can boost your video content’s visibility in search engines. Search engines cannot watch videos, but they can index text. Adding captions or streaming subtitles in formats like WebVTT makes your live and on-demand videos easier to find online. Captions also help your content meet legal standards, including ADA and FCC compliance, which is especially important for broadcasters and enterprise video users.
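One practical way to give search engines that text is to publish a plain transcript alongside the video. The Python sketch below pulls the spoken text out of a WebVTT file. It is a simplified example with a hypothetical function name: it assumes LF line endings and ignores styling tags, and it skips any block without a timing line (such as the header or notes).

```python
def vtt_to_transcript(vtt_text):
    """Return the cue text of a WebVTT document as one plain string."""
    parts = []
    for block in vtt_text.strip().split("\n\n"):
        lines = block.splitlines()
        # Find the timing line; blocks without one (header, NOTE) are skipped.
        timing_index = next(
            (i for i, line in enumerate(lines) if "-->" in line), None
        )
        if timing_index is None:
            continue
        # Everything after the timing line is caption text.
        parts.extend(lines[timing_index + 1:])
    return " ".join(parts)


sample_vtt = """WEBVTT

00:00:06.000 --> 00:00:11.000
I hear there is a motion in the wind.

00:00:12.500 --> 00:00:16.500
Yes, I have heard it as well.
"""
print(vtt_to_transcript(sample_vtt))
# I hear there is a motion in the wind. Yes, I have heard it as well.
```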

In 2025, AI tools and live transcription make it easier than ever to stay compliant and reach more viewers. Platforms like Dacast support modern captioning workflows, helping you meet standards while improving user experience.

Future of Closed Captioning

IMSC (TTML-based) and CEA-608/708 Carried in MPEG-4 Streams for OTT/IP Delivery

IMSC (Internet Media Subtitles and Captions) is gaining traction in OTT/IP video streaming. IMSC1, which is TTML-based, is a modern solution for delivering closed captions in streaming video. It’s often carried in MPEG-4 streams, ensuring compatibility with newer video formats. As video captioning standards continue to evolve in 2025, more platforms and broadcasters are adopting IMSC1 for live stream closed captioning. It supports multiple languages and is well-suited for accessibility, making it ideal for OTT services like Dacast.
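For a sense of what TTML-based captions look like, the Python sketch below builds a bare-bones TTML document with the standard library. It uses the TTML namespace and the `begin`/`end` timing attributes on `p` elements. The function name and sample cues are hypothetical, and the output has not been validated against the IMSC1 profile, which adds further constraints.

```python
import xml.etree.ElementTree as ET

TTML_NS = "http://www.w3.org/ns/ttml"
XML_NS = "http://www.w3.org/XML/1998/namespace"


def build_ttml(cues, lang="en"):
    """Build a minimal TTML document from (begin, end, text) tuples."""
    # Serialize TTML elements without a prefix (default namespace).
    ET.register_namespace("", TTML_NS)
    tt = ET.Element(f"{{{TTML_NS}}}tt", {f"{{{XML_NS}}}lang": lang})
    body = ET.SubElement(tt, f"{{{TTML_NS}}}body")
    div = ET.SubElement(body, f"{{{TTML_NS}}}div")
    for begin, end, text in cues:
        # Each caption is a <p> element with its own begin and end time.
        p = ET.SubElement(div, f"{{{TTML_NS}}}p", {"begin": begin, "end": end})
        p.text = text
    return ET.tostring(tt, encoding="unicode")


ttml_cues = [
    ("00:00:06.000", "00:00:11.000", "I hear there is a motion in the wind."),
    ("00:00:12.500", "00:00:16.500", "Yes, I have heard it as well."),
]
print(build_ttml(ttml_cues))
```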

WebVTT and SMPTE-TT for HTML5, HLS, and DASH Streaming

WebVTT (Web Video Text Tracks) and SMPTE-TT (Society of Motion Picture and Television Engineers Timed Text) are essential in the modern landscape of video captioning. WebVTT for live streaming is now widely used in HTML5, HLS (HTTP Live Streaming), and MPEG-DASH (Dynamic Adaptive Streaming over HTTP) for delivering captions on the web. As video accessibility regulations become stricter, these formats are becoming standard for OTT services. They ensure compliance with WCAG 2.1 and ADA video compliance, supporting features like real-time speech-to-text captions and automated closed captions with AI-powered tools.

ARIB Standards in Asia (e.g., ARIB B37 for Japan)

In Asia, ARIB (Association of Radio Industries and Businesses) standards are crucial for video captioning. For instance, ARIB B37 is widely used in Japan to ensure accessibility for broadcast and streaming platforms. These standards offer closed captioning for video content, ensuring compliance with regional regulations and supporting video captioning for accessibility. As video captioning trends shift toward global uniformity, ARIB standards play a key role in maintaining accessibility across various platforms in Asia.

ATSC 3.0 (NextGen TV), Which Supports IMSC1 for Captions and Subtitles in UHD/4K Live Streams

ATSC 3.0, or NextGen TV, is the future of broadcast television in the U.S. and beyond. It supports IMSC1 captions and subtitles, providing high-quality accessibility in UHD/4K live streams. As broadcasting moves toward higher resolution content, next-gen video captioning standards like IMSC1 are essential. This shift enables broadcasters to enhance their content with accurate and synchronized captions, ensuring compliance with FCC captioning requirements. The adoption of ATSC 3.0 is pushing the boundaries of accessibility in live streaming, offering new opportunities for businesses to engage wider audiences with ADA-compliant video content.

Future of Closed Captioning Standards

Closed captioning is moving beyond legacy formats like CEA-608 and CEA-708. Newer standards such as WebVTT, IMSC1 (a profile of TTML), and SMPTE-TT offer better support for styling, timing, and device compatibility. These formats also allow for multilingual captions by using metadata tagging, which makes it easier to serve global audiences. ATSC 3.0, the next-gen TV broadcast standard, supports IMSC1 captions for hybrid delivery across broadcast and IP networks. For video streaming platforms like Dacast, adopting these standards ensures long-term flexibility and accessibility.

Conclusion

Hopefully, this article has introduced you to the world of closed captions. Captioning your content can be a legal necessity, but can also be a major boost for the quality of your content and your viewers’ experience.

Are you looking for a live streaming video provider with support for CEA-608 (Line 21) and CEA-708 captions? One great option is Dacast. We offer streaming via top-tier CDNs, some of the largest in the world. Our live streaming platform also includes a wide range of other professional/OTT features, such as ad-insertion and real-time analytics, all at competitive prices.

Want to give the Dacast live streaming video provider a try? Sign up now for a 14-day free trial, no credit card required!

Looking for more live streaming tips and exclusive offers? Why not join our LinkedIn group? Thanks for reading, and good luck with your live streams.
